Buy vs. Build: Gen AI edition!

Buy vs. build is the age-old question when it comes to software solutions. Should you build your own and reap the benefits of owning your own custom solution (along with the associated challenges), or should you invest in an external solution with a reputable vendor, not worry about supporting the solution but give up some customization?

Generative AI solutions raise these same questions, with a mix of well-documented pros and cons and a few key new dimensions to consider. Rather than reviewing every consideration in detail, let’s focus on the challenges that also apply to traditional software solutions but become more acute in the context of Gen AI.

Some of the traditional trade-offs to consider include the short-term and long-term implications for benefits, costs, support and maintainability, usability and adoption, scalability, integration, intellectual property and security. While all of these dimensions need to be considered carefully when selecting a buy vs. build strategy, cost, scalability, intellectual property and security are particularly important when it comes to Generative AI.

Scalability: Consider whether the solution can scale as your business grows. When it comes to Generative AI solutions, the volume of data needed to train or tune the models can be enormous. As such, training your own model from scratch may be an unreasonable task to take on. Many open-source solutions exist today for users to start from, offering the benefit of an already trained model that can be fine-tuned for your needs. But is fine-tuning even achievable? Or should you consider leveraging techniques such as Retrieval Augmented Generation (RAG) only? And if so, does this approach constitute a custom solution, or would it be an “off-the-shelf” solution?

What about future scalability of your models? Running your own LLMs locally or in a cloud (private or otherwise) may allow you to control scalability, but at what cost?

Intellectual Property (IP): The traditional IP questions exist with Gen AI just like with any other software solution. Specifically, who owns the intellectual property related to the solution? But beyond the IP of the solution itself, we now have a bleed-through challenge: the data used to train the models. Many vendors of Generative AI solutions (e.g., OpenAI, Mistral) offer solutions whereby they guarantee that your private data will not be used to improve their models’ performance outside of your own separate and private instance. This raises a couple of key questions, from trust (do you trust the vendor to keep your data out of its models?) to performance (would the models not perform better if they were trained on your data? Can the vendor achieve similar performance levels without using your data for training purposes?).

Security: As always, security is a key consideration, and it is a more pressing concern than ever in the context of Generative AI. How secure is your data? Can it bleed into the vendor’s models surreptitiously? How do you make sure that you keep your data, and your customers’ data, private and secure? These questions need to be evaluated very carefully when selecting an approach, and they should also be monitored consistently as time goes on.

Cost: Generative AI is expensive, both to train and to use. It commands very high compute costs, particularly during the training stages of the models, but also at inference time, when the models evaluate prompts and produce answers. While these costs have been decreasing, the overall cost of training and running these models remains very high, particularly as adoption and usage increase. CNBC (March 2023) provides some illustration of these costs.

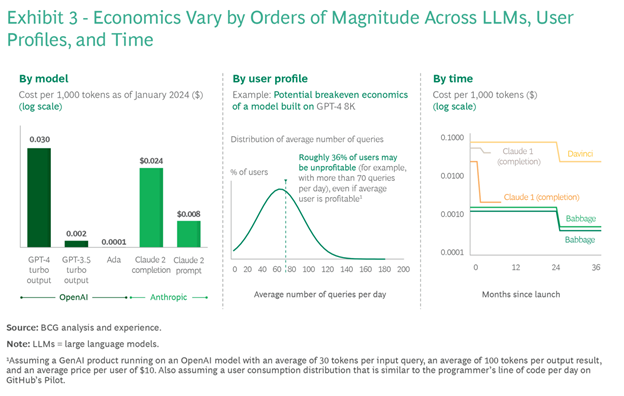

BCG (February 2024) analyzed the cost per 1,000 tokens. Its chart highlights how costs have decreased over time, but also how vastly different they can be from one model to another, and how quickly these models can become unprofitable depending on usage.
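To make the link between usage and profitability a bit more concrete, here is a minimal back-of-the-envelope sketch in Python. It assumes a flat price per 1,000 tokens and a fixed revenue per request; the `UsageScenario` class, the function names, and every number in it are hypothetical placeholders for illustration, not figures from BCG, CNBC, or any vendor.

```python
from dataclasses import dataclass


@dataclass
class UsageScenario:
    """Hypothetical monthly usage profile; all values are placeholders."""
    requests_per_month: int
    tokens_per_request: int      # prompt + completion tokens combined
    price_per_1k_tokens: float   # assumed flat price in USD
    revenue_per_request: float   # assumed revenue earned per request, in USD


def monthly_token_cost(s: UsageScenario) -> float:
    """Total monthly spend on tokens under a flat per-1,000-token price."""
    total_tokens = s.requests_per_month * s.tokens_per_request
    return total_tokens / 1000 * s.price_per_1k_tokens


def monthly_margin(s: UsageScenario) -> float:
    """Revenue minus token cost; negative means the use case loses money."""
    revenue = s.requests_per_month * s.revenue_per_request
    return revenue - monthly_token_cost(s)


def break_even_price_per_1k(s: UsageScenario) -> float:
    """Highest per-1,000-token price at which a request still pays for itself."""
    return s.revenue_per_request / s.tokens_per_request * 1000


# Same usage, two made-up price points standing in for a cheaper and a pricier model.
cheaper = UsageScenario(100_000, 2_000, price_per_1k_tokens=0.002, revenue_per_request=0.01)
pricier = UsageScenario(100_000, 2_000, price_per_1k_tokens=0.03, revenue_per_request=0.01)

for name, scenario in [("cheaper model", cheaper), ("pricier model", pricier)]:
    print(f"{name}: cost ${monthly_token_cost(scenario):,.2f}, "
          f"margin ${monthly_margin(scenario):,.2f}, "
          f"break-even ${break_even_price_per_1k(scenario):.4f} per 1k tokens")
```

With identical usage, the only thing that changes between the two scenarios is the per-token price, which is enough to flip the margin from positive to negative. A real comparison would also need to account for things this sketch ignores, such as separate input and output token prices, hosting and staffing costs for self-run models, and volume discounts.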

So, what’s our approach at PROS? Well, we have been building in-house solutions for almost 40 years, and we will continue to do so, but we also recognize the importance of balancing fully custom and external solutions where it makes sense. For now, we are evaluating all options, which means building models that leverage existing technologies such as Azure OpenAI and running our own Generative AI models locally to compare their performance. We are also leveraging off-the-shelf solutions where we feel it makes the most sense. As we continue to learn and refine our understanding of these models, we will continue to adjust our internal strategy.

What’s your approach? What have you learned on your AI journey?
